The online world has a problem: harmful content and interactions are growing. At its worst, harmful content and communications are used to incite physical violence, but receiving negative comments online can also be extremely damaging to one’s mental health, sense of self-worth, and overall safety.

That is why, when brands build online communities of their own, online community safety should be a top priority.

The High Cost Of Harmful Online Content

Harmful online content has many other impacts. As mentioned above, mental health can suffer when people are exposed to negative content and, in the majority of cases, this harmful content is targeted at women and minorities. Platforms such as Twitter, Facebook, and YouTube all struggle with harmful content despite their significant efforts to counter it. Let’s look at a few statistics that illustrate the depth of this challenge.

Social Media Platforms Spend Billions On Content Moderation

In 2021, CNBC reported that Facebook alone was spending billions of dollars on content moderation. Those costs will likely increase as moderators raise concerns about mental health problems and inadequate pay.

The $78 Billion Impact of Fake News

A 2019 report from CHEQ estimated the financial cost of fake news at $78 billion. The report defines fake news as “The deliberate creation and sharing of false and/or manipulated information that is intended to deceive and mislead audiences, either for the purposes of causing harm or for political, personal or financial gain.” Such efforts are hurting democratic governance, eroding trust, and making it more difficult to create positive online communities.

Increasing Government Attention

In its early days, the Internet grew under minimal regulation. The impact of harmful online content and increasing concerns about privacy protection are now changing the online landscape. Marketers have already had to adapt their practices in light of Europe’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

New proposals from the British and Canadian governments call for further regulation of harmful online content. So far, these efforts are aimed at a small number of large technology companies. Eventually, those expectations may apply to other businesses like yours.

It’s clear that harmful content is a significant problem. While the problem may be at its worst on large, publicly available social media sites, it is still a concern for other businesses. For example, your company may offer virtual events and virtual conferences to engage your audience, and such events are typically intended to reach many people. The more people who attend, the greater the risk that harmful or damaging content will undermine your online community safety efforts.

That’s the bad news. The good news is that the situation is far from hopeless. There are specific steps you can take to engage your audience without risking your online community’s safety.

The Two Main Types of Online Communities

Creating a thriving online community means taking risks. Some of those risks – like new content ideas not connecting with your audience – are worth taking. Other risks, such as your event being derailed by harmful content, are not.

Fostering a positively engaged audience takes a variety of strategies. The methods you choose will depend on how you run your online community. For simplicity, let’s consider two examples: a public and a closed community. It is worth noting that an organization may have both kinds of communities for different purposes (e.g., a public community for lead generation and a closed community for customers).

- A public online community is one where you throw the doors wide open and anybody can enter your event or community. For example, a media brand offering a live chat experience during an election or a championship sporting event might prefer a public event to maximize attendance numbers and potential advertising revenue. The fully open nature of this type of community means a heightened risk of inappropriate content.

- A closed online community is limited in some fashion. This limitation can take a variety of forms. For example, you might charge an admission fee to join your event. Alternatively, your community might be invitation-based (e.g., only your top 100 customers are invited to an exclusive ‘customer council’ community). In contrast to a publicly accessible community, a closed online community will usually have far fewer attendees. The advantages of a closed community include a lower likelihood of disruptive people (i.e., “trolls”) and more common ground between participants.

Growing a Thriving Online Community: Essential Tools for Online Community Safety

Fostering a thriving and safe online community requires multiple strategies. Here are some effective and straightforward strategies to help you achieve it.

Leverage Technology To Filter Inappropriate Content

Arena offers a profanity filter that makes it easy to prevent the most common forms of inappropriate communication. Using this capability makes it much easier to create an environment safe for your audience. We’ll explore this capability in further detail below.

Establish Simple Community Guidelines

Aside from straightforward situations like profanity, there is some disagreement about what counts as appropriate vs. inappropriate in the online world. For example, some communities love to debate and are passionate about sharing their opinions – which can be either wonderful or hurtful! One approach is to create simple rules and ask all community participants to follow them.

For example, your online community might take inspiration from Reddit communities that often use rules such as: don’t be a jerk, limit self-promotion, keep discussions on topic, and moderators reserve the right to intervene.

Add Barriers To Entry For Your Community

Adding barriers to entry, such as requiring a user to register for an account or pay an admission fee, is an effective way to discourage disruptive behavior. Alternatively, your barrier to entry might be based on limited awareness – like only inviting people on your email list to join the event.

Optional: Role Play With Your Event Staff

For organizations planning to host events with a large number of attendees or a large number of events, additional training through role play is helpful. In this case, ask two to three employees to serve as event staff and ask 5-10 employees to take the role of participants. Secretly ask a few of the employee-participants to act disruptively and observe how the event staff handle the situation.

Training your employees and encouraging positive behavior in your audience are some of the most powerful ways to maintain a safe community. Unfortunately, these strategies take time to develop. They are worth developing, but it is also vital to offer your community a certain baseline level of safety. That’s where Arena’s content moderation capabilities make a big difference.

Five Ways To Build A Safe Online Community with Arena

By using Arena Live Chat, you have several options to build a safe community. Each organization will use these options differently depending on its values and community needs. For the best results, invite your moderation team to become familiar with these tools.

1) Use The Profanity Filter

Reducing profanity is a quick win for creating a safer online community. There is a built-in profanity list of commonly banned words. You can review, edit, and update this list based on your needs. Once the filter detects a banned word, you can choose whether to replace the word with a series of asterisks (***) or block the user. Blocking users who use profanity may be wise in situations where the live chat includes children or controversial topics.

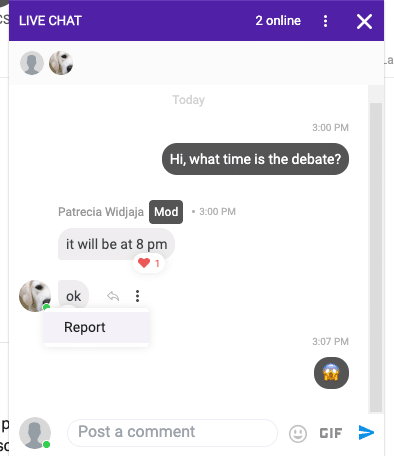
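To make the two filter behaviours described above concrete, here is a minimal Python sketch of a word-list filter that either masks a banned word with asterisks or flags the sender for blocking. It is an illustration only, not Arena's implementation: the `BANNED_WORDS` list, the `FilterResult` type, and the `filter_message` function are hypothetical names invented for this example.

```python
import re
from dataclasses import dataclass

# Hypothetical banned-word list for illustration; a real list would be
# reviewed and edited by your moderation team.
BANNED_WORDS = frozenset({"darn", "heck"})


@dataclass
class FilterResult:
    text: str      # message text after filtering (empty if the sender is blocked)
    blocked: bool  # True if the sender should be blocked


def filter_message(text: str, banned: frozenset = BANNED_WORDS,
                   block_user: bool = False) -> FilterResult:
    """Mask banned words with asterisks, or flag the sender for blocking."""
    if not banned:
        return FilterResult(text, False)
    # Match whole words only, ignoring case, so "darning" is left alone.
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(w) for w in sorted(banned)) + r")\b",
        re.IGNORECASE,
    )
    if not pattern.search(text):
        return FilterResult(text, False)
    if block_user:
        return FilterResult("", True)
    return FilterResult(pattern.sub("***", text), False)
```

Calling `filter_message("Well darn, that was close")` returns the masked text `Well ***, that was close`, while passing `block_user=True` suppresses the message and marks the sender as blocked. Matching whole words is a deliberate choice in this sketch: it avoids masking harmless words that merely contain a banned word.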

2) Review Reported Users

Arena Live Chat lets chat users report another user for inappropriate activities (e.g., inappropriate direct messages). During the live chat, review the reported users every few minutes and do a quick investigation. If the infraction is minor, you may decide to send a warning message to the user and delete their message. For more serious violations, see the next option.

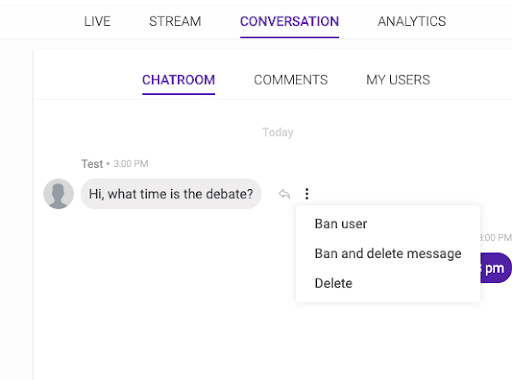

3) Ban User and Delete Message

In some environments – especially public live chats with a large number of users – you may encounter highly inappropriate content (e.g., sexist or racist messages, or other violations of your policies). If these violations are ignored, disruptive users can become emboldened. That’s why it is helpful to use the “Ban user and delete message” capability. Arena saves banned users in the organization’s dashboard so there’s less chance of suffering disruption in the future.

4) Delete Message

This moderation feature is a good choice for borderline inappropriate content. For example, you might choose to delete a message (rather than banning the user) if some users engage in self-promotion, assuming your guidelines discourage that activity. Likewise, the delete message capability might be useful when you see a rise in off-topic discussions.

What if you end up deleting a significant number of messages? Large live chat sessions that are fully open to the public tend to face this risk. Reinforcing your code of conduct expectations for the event can help. If problems persist, you may wish to consider using the pre-moderation feature to further control the flow of discussion.

5) Use The Pre-Moderation Feature To Maximize Brand Safety

Traditionally, a live chat experience emphasizes live interaction between users. Usually, it is best to encourage a free flow of conversation. Yet there are some situations where it’s appropriate to intervene more heavily by using pre-moderation. In essence, pre-moderation means that each message has to be manually approved by moderators before it shows up in the live chat window.

There are pros and cons to using pre-moderation in your live chat. The advantage is that you can filter out almost all inappropriate chat content. There are downsides, though. First, moderator review of each message slows down the pace of the conversation significantly, so your audience may become disengaged if they are kept waiting too long. Second, pre-moderation requires more effort from your team.
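The pre-moderation flow described above can be pictured as a queue that sits between the sender and the chat window. The following Python sketch is a simplified model written for this article, not Arena's API: `Message`, `PreModeratedChat`, `submit`, and `review_next` are hypothetical names chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Optional


@dataclass
class Message:
    user: str
    text: str


@dataclass
class PreModeratedChat:
    """Hypothetical model: messages wait in `pending` until a moderator decides."""
    pending: Deque[Message] = field(default_factory=deque)
    visible: List[Message] = field(default_factory=list)

    def submit(self, user: str, text: str) -> None:
        # New messages are never shown directly; they join the review queue.
        self.pending.append(Message(user, text))

    def review_next(self, approve: bool) -> Optional[Message]:
        """Review the oldest pending message; publish it only if approved."""
        if not self.pending:
            return None
        message = self.pending.popleft()
        if approve:
            self.visible.append(message)
        return message
```

In this model, a message submitted by a participant stays invisible until a moderator calls `review_next(approve=True)`. The length of `pending` is a rough proxy for how long your audience is waiting, which is the trade-off to watch when you enable pre-moderation.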

Conclusion

Maintaining a safer online community requires ongoing effort and the right tools. With Arena’s advanced moderation and engagement features, you can create a secure and welcoming environment for all users. Find out more about Arena’s social communities solution.