One of the benefits of working with online platforms and social media channels is that your audience gets direct access to your brand. While likes and shares help you improve brand awareness, comments are where your community can really thrive. Through comment sections, users can share their feedback, participate in threads, and interact with others, making it essential to follow content moderation best practices to keep these interactions positive and productive.

Enabling comments on your website is crucial to foster engagement and community-building, but it can also present challenges in ensuring a safe and respectful environment. This is where effective content moderation comes into play.

In this guide, we’ll explore six of the best content moderation practices to help you keep your comment section a positive and welcoming environment.

What is Content Moderation?

Content moderation is the process of evaluating and managing content, normally generated by users, on platforms such as social media channels, websites, or blogs. This verification process ensures that user-generated content follows community guidelines, filtering out harmful, inappropriate, misleading, or offensive content, including hate speech, harassment, misinformation, or illegal material.

Effective user-generated content moderation is the foundation of maintaining healthy, engaging, and respectful online communities. Moderation can be handled by human moderators, by specialized software and AI tools, or by a combination of the two. Let’s look at how each approach works.

Types of Content Moderation

1. Manual Moderation

This refers to having a team of human moderators review and evaluate content. Review can happen before content is published (pre-moderation) or after it goes live (post-moderation), depending on the software you use and how advanced its filtering options are.

One of the greatest advantages of having a dedicated team to carefully examine user-generated content is that human moderators are able to understand nuance, context, and cultural sensitivities in a way automated systems often can’t. This leads to more accurate and empathetic moderation decisions, making users feel like their opinions matter and are being acknowledged by the team.

Manual moderation can be particularly helpful for smaller brands with low user activity or those facing budgetary constraints. However, it is time-consuming and can delay publishing, since every piece of content must be reviewed by hand.

2. AI Moderation

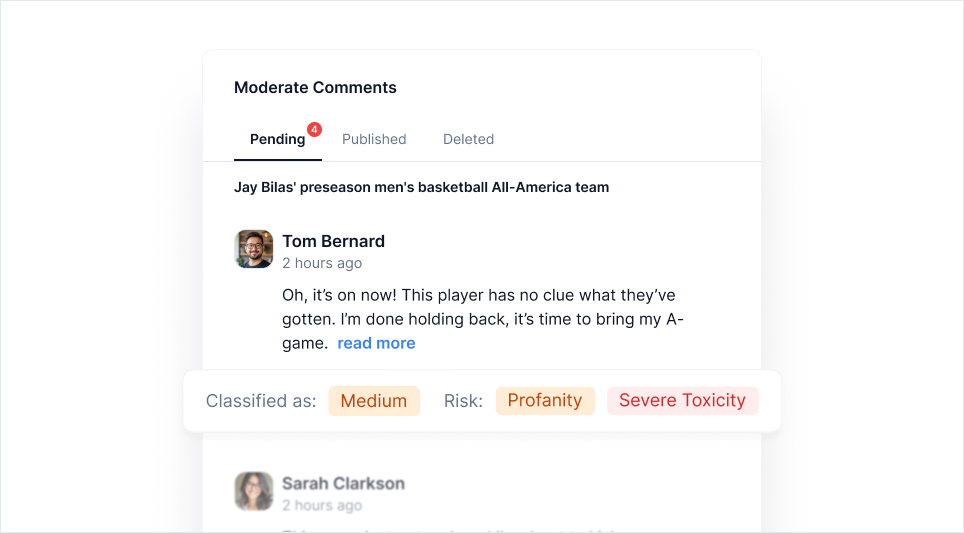

Artificial intelligence moderation, also known as automated moderation, uses AI to scan, analyze, and filter content based on predefined criteria. Because it significantly reduces the need for human intervention, this method is faster and can process large volumes of content, either before or after publication.

However, the tool needs regular updates to keep improving its accuracy. And while relying exclusively on automated tools is cost-effective, AI can mistakenly flag legitimate content as inappropriate (false positives) or fail to detect harmful content (false negatives). Supplementing AI moderation with human oversight is the most effective way to catch these errors.

3. Hybrid Moderation

This approach combines the best of both worlds: AI’s capacity for processing large amounts of content and a human moderator’s attention to detail.

Implementing hybrid moderation can enhance efficiency by allowing AI to handle routine tasks and pre-filter comments, while human moderators focus on complex cases. Additionally, AI can learn from human decisions and improve its accuracy over time.

Hybrid moderation is an ideal approach for businesses that are aiming to scale their content efforts while still maintaining quality, control, and accountability.
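For teams building their own tooling, the core routing decision in hybrid moderation can be sketched in a few lines of Python. This is a minimal sketch, not a description of any specific product: it assumes a hypothetical AI classifier (not shown) that gives each comment a harm score between 0 and 1, and the two thresholds are placeholder values you would tune against your own data.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cut-offs on a 0.0-1.0 "likely harmful" score from an AI classifier.
    approve_below: float = 0.2
    reject_above: float = 0.9


def route_comment(harm_score: float, thresholds: Thresholds = Thresholds()) -> Decision:
    """Decide which layer handles a comment, given its classifier harm score."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score < thresholds.approve_below:
        return Decision.APPROVE       # clearly fine: publish automatically
    if harm_score > thresholds.reject_above:
        return Decision.REJECT        # clearly harmful: block automatically
    return Decision.HUMAN_REVIEW      # uncertain: send to a human moderator


# Example: three comments with made-up classifier scores.
for score in (0.05, 0.5, 0.95):
    print(score, route_comment(score).value)
```

AI handles the confident cases at both ends, while the uncertain middle band goes to people. Each human verdict on those middle-band comments can be saved as a labeled example for retraining, which is how the AI learns from human decisions over time. Widening the band sends more comments to humans (fewer automated mistakes, more review work); narrowing it does the opposite.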

Content Moderation Best Practices

An effective content moderation process helps keep your community a safe and welcoming space for users. To prevent potential issues, consider these six moderation best practices:

1. Establish Community Guidelines

Defining clear, detailed community guidelines is the first step toward effective content moderation. These rules must clearly state which behaviors are acceptable, setting expectations for users who share their opinions in your website’s comment section.

Cover essential elements, including:

- Personal information sharing restrictions

- Staff naming policies

- Language standards

- Rules about external links and advertising

- Policies on defamatory content, intolerance, discrimination, and bullying

Ensure these guidelines are easily accessible to your community members by integrating them into your navigation menu, website footer, and user registration process.

Remember that establishing guidelines is only part of the equation. Outlining the consequences of violations, such as comment removal, account suspension, or even a permanent ban, is equally important.

Try to frame your community guidelines positively where possible. Instead of just listing prohibited behaviors, encourage constructive conversation and highlight what makes a valuable contribution to the community.

2. Implement a Comprehensive Moderation Strategy

As discussed above, there are several types of content moderation, and each brand must assess its options to find what works best for its website.

However, a hybrid moderation approach (also known as a multi-layered moderation strategy) is highly recommended for websites aiming to scale their content efforts effectively.

By combining AI and human moderation, you can handle large volumes of comments while ensuring proper context and nuance in moderation decisions.

Your multi-layered moderation strategy should include:

- Automated Moderation: Use AI and machine learning tools to pre-filter and screen content, identifying potential violations such as spam, prohibited words, and inappropriate content.

- Human Moderation: You can let AI automatically approve pre-screened comments, with human moderators stepping in only for complex cases. Alternatively, AI can filter the content first, and human moderators then review everything before it goes live. Human oversight ensures that legitimate content isn’t incorrectly removed and that truly problematic content doesn’t slip through automated filters.

- User Reporting Systems: Beyond AI and human moderators, you can involve your community in the moderation process by letting users flag inappropriate content. A flagged comment can be routed automatically to human moderators once it crosses a set risk threshold, or handled by automated systems when the violation is clear-cut.

This layered approach creates an effective moderation system that can scale with your community while maintaining high standards of content quality. The key is to let each layer handle what it does best: automated systems for speed and scale, human moderators for nuance and context, and community reporting for additional oversight.
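The automated and community-reporting layers can be combined in a simple flag handler. In this sketch, the blocklist, the `REVIEW_THRESHOLD` of three distinct reporters, and the status names are all hypothetical placeholders; a production system would more likely use a trained classifier than plain substring matching to spot clear violations.

```python
from dataclasses import dataclass, field

# Hypothetical blocklist; a real one would come from your community guidelines.
BLOCKED_TERMS = {"buy followers", "free crypto"}
# Hypothetical number of distinct reporters that sends a comment to human review.
REVIEW_THRESHOLD = 3


@dataclass
class Comment:
    comment_id: int
    text: str
    reporters: set[str] = field(default_factory=set)
    status: str = "published"


def is_clear_violation(text: str) -> bool:
    """Treat a case-insensitive blocklist match as a clear-cut violation."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def handle_flag(comment: Comment, reporter_id: str) -> str:
    """Record a user report and decide which moderation layer handles it."""
    comment.reporters.add(reporter_id)  # a set ignores repeat reports from one user
    if is_clear_violation(comment.text):
        comment.status = "removed"  # automated layer: obvious violation
    elif len(comment.reporters) >= REVIEW_THRESHOLD:
        comment.status = "human_review"  # human layer: needs context and nuance
    return comment.status


# Example: a disputed opinion reaches human review after three different users flag it.
opinion = Comment(1, "I disagree with this article.")
for user in ("u1", "u1", "u2", "u3"):
    print(user, handle_flag(opinion, user))
```

Counting distinct reporters, rather than raw reports, keeps a single user from pushing a comment into the review queue by flagging it repeatedly.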

3. Develop a Structured Response System

A tiered system of actions and consequences helps you maintain consistency and fairness when handling comment violations. Your response system should match the severity of the violation while allowing room for both rehabilitation and escalation. Consider implementing the following tiered approach:

Minor Infractions: For off-topic comments or mild language violations:

- Issue a warning message explaining the specific guideline violation.

- For repeated minor violations, implement temporary commenting restrictions, such as a 24-hour cooling-off period.

Moderate Violations: For personal attacks or spam:

- Remove the offensive content.

- Suspend the user’s commenting privileges for a longer period.

- Clearly communicate why the action was taken and the steps to regain commenting privileges.

Severe Violations: For serious issues like hate speech, harassment, or repeated moderate violations:

- Implement permanent bans.

- Document the decision-making process and maintain records of the violation history.

Remember to apply these consequences consistently across all users and be transparent about your actions. Consistent and transparent enforcement builds trust in your moderation system and helps prevent accusations of bias or unfair treatment.
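The tiered approach above can be expressed as a small escalation function, which also makes it easier to apply consequences consistently. This is a hypothetical sketch: the seven-day suspension stands in for the “longer period” mentioned above, and the action names are placeholders for whatever your comment platform actually supports.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    MINOR = 1     # off-topic comments, mild language
    MODERATE = 2  # personal attacks, spam
    SEVERE = 3    # hate speech, harassment


@dataclass
class UserRecord:
    user_id: str
    history: list[Severity] = field(default_factory=list)  # documented violation history


def decide_action(user: UserRecord, severity: Severity) -> str:
    """Map a new violation to a consequence, escalating for repeat offenders."""
    prior_minor = user.history.count(Severity.MINOR)
    prior_moderate = user.history.count(Severity.MODERATE)
    user.history.append(severity)  # keep a record of every violation

    # Severe violations, and repeated moderate ones, lead to a permanent ban.
    if severity is Severity.SEVERE or (severity is Severity.MODERATE and prior_moderate >= 1):
        return "permanent_ban"
    if severity is Severity.MODERATE:
        return "remove_comment_and_suspend_7_days"  # hypothetical duration
    if prior_minor >= 1:
        return "suspend_24_hours"  # cooling-off period for repeated minor violations
    return "warning"


# Example: one user's escalating violations.
alice = UserRecord("alice")
for s in (Severity.MINOR, Severity.MINOR, Severity.MODERATE, Severity.MODERATE):
    print(s.name, decide_action(alice, s))
```

Because every call appends to the user’s history, the same record doubles as the documented violation history that severe cases call for.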

4. Nurture Positive Community Engagement

You can cultivate a constructive commenting environment by implementing proactive strategies that encourage community engagement. Start by creating a welcoming atmosphere through active staff participation. Have your team initiate discussions and respond regularly to user comments.

Another good idea is to implement a recognition system to reward positive contributors. Here are some effective ways to do that:

- Feature “Top Commenter” badges for users who consistently provide thoughtful contributions

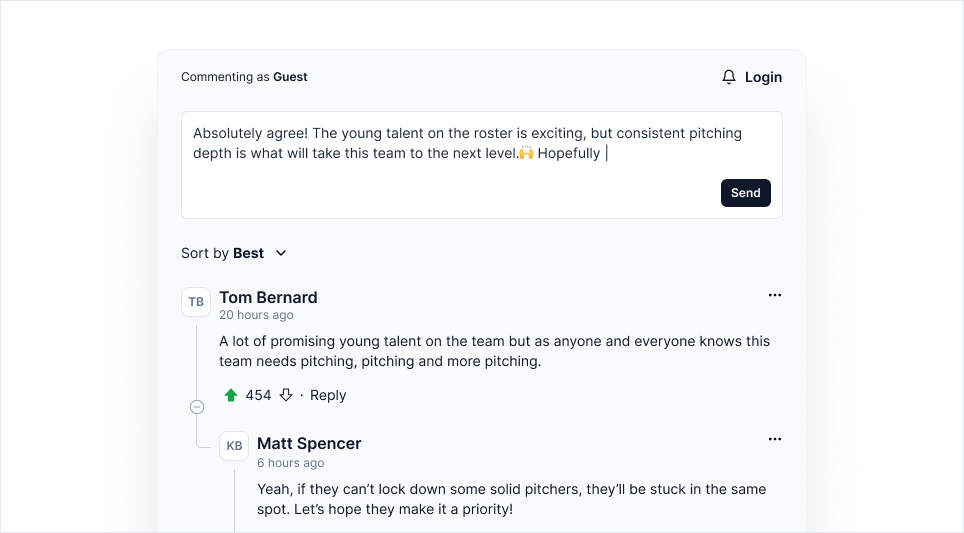

- Implement an upvoting (and downvoting) system to let the community highlight valuable comments

- Create a “Verified Commenter” program that gives trusted users the ability to post without moderation delays

- Showcase the best comments at the top of the discussion section

- Reference insightful user comments in future content, showing that you value community input

By employing these audience engagement strategies, you can encourage participation and improve the overall user experience.

Take The New York Times’ approach as an example. Their “Verified Commenter” program has successfully accelerated discussion engagement while maintaining quality. You can also implement a tiered system where regular contributors earn increasing privileges, such as the ability to post links or use rich text formatting.
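A tiered privilege system like this can be driven by a simple lookup on each user’s count of approved comments. The tier names, thresholds, and privilege labels below are hypothetical placeholders for illustration, not figures from any real program.

```python
# Hypothetical tiers, sorted by the minimum number of approved comments required.
TIERS = [
    (0, "new", frozenset()),
    (25, "regular", frozenset({"post_links"})),
    (100, "verified", frozenset({"post_links", "rich_text", "skip_premoderation"})),
]


def tier_for(approved_comments: int) -> tuple[str, frozenset]:
    """Return the name and privileges of the highest tier the user has reached."""
    name, privileges = TIERS[0][1], TIERS[0][2]
    for minimum, tier_name, tier_privileges in TIERS:
        if approved_comments >= minimum:
            name, privileges = tier_name, tier_privileges
    return name, privileges


def has_privilege(approved_comments: int, privilege: str) -> bool:
    """Check whether a user's tier grants a specific privilege."""
    return privilege in tier_for(approved_comments)[1]


# Example: a long-time contributor is verified; a newcomer can't post links yet.
print(tier_for(150)[0])                 # verified
print(has_privilege(10, "post_links"))  # False
```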

Remember that your brand should actively participate in discussions as well: ask follow-up questions, run polls in the comments, and acknowledge particularly insightful contributions. This kind of participation shows that you value the community’s input and encourages others to maintain high standards in their own contributions.

5. Train and Support Your Moderation Team

As your community evolves, so should your moderation process. Both AI and human moderators require continuous training to improve accuracy and effectiveness.

Human moderators should be trained to recognize policy violations, interpret cultural nuances, and stay alert to emerging trends in harmful content. At the same time, AI tools must be continuously updated with fresh datasets to improve their accuracy in filtering inappropriate material.

Here are some suggestions on how you can continuously evolve your moderation process:

- Provide Comprehensive Training

  Cover your community guidelines in depth, including how to recognize different types of problematic content and handle gray areas.

- Establish Decision-Making Frameworks

  Keep moderators updated on emerging trends and challenges through regular training sessions. Teach them to maintain neutrality during conflicts and model desired behavior.

- Ensure Consistency in Enforcement

  - Establish clear escalation procedures for complex cases.

  - Hold regular team meetings to align moderation decisions and share experiences.

- Protect Moderators’ Mental Health

  - Implement a rotation system to avoid prolonged exposure to disturbing content.

  - Provide access to counseling services.

  - Mandate regular breaks.

  - Foster an environment where moderators feel comfortable discussing challenges and seeking support.

6. Monitor and Adapt Moderation Practices

Regularly assess and adapt your moderation process by adding a few steps to your team’s routine. Consider implementing the following:

- Track Key Metrics

  Monitor the volume of comments and other user-generated content, flag rates, removal frequency, and response times to spot trends and potential issues early. Engagement monitoring also keeps you informed about how your community interacts: metrics such as engagement rate, impressions, click-through rate, and conversion rate show how your content resonates with your audience. Tools like Hootsuite Analytics and Google Analytics can provide more detailed insights.

- Adjust Moderation Intensity

  - Increase moderation for highly emotional or politically contested topics.

  - Maintain lighter oversight for low-risk discussions.

- Review and Update Guidelines Whenever Necessary

  - Periodically review community guidelines.

  - Adjust policies to address recurring problems.

  - Implement additional measures if patterns of issues arise (e.g., increased spam during certain hours).

- Keep an Open Communication Channel

  - Clearly inform your community about any policy updates.

  - Maintain transparency to build trust and ensure everyone understands current expectations.

  - Keep your moderation team aligned on updated policies through regular training and documentation.

Applying these online community safety tips can help maintain a safe and welcoming environment for all users.
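The volume, flag-rate, removal-frequency, and response-time metrics listed under Track Key Metrics can be computed from a basic moderation log. This sketch assumes a hypothetical log format in which each entry records whether a comment was removed and, if it was flagged, when the flag was raised and when a moderator resolved it. Engagement metrics such as impressions or click-through rate would still come from an analytics tool.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class LogEntry:
    comment_id: int
    removed: bool = False
    flagged_at: Optional[datetime] = None   # set when a user or filter flags the comment
    resolved_at: Optional[datetime] = None  # set when a moderator acts on the flag


def moderation_metrics(log: list[LogEntry]) -> dict[str, float]:
    """Summarize volume, flag rate, removal rate, and median response time."""
    total = len(log)
    if total == 0:
        return {"volume": 0, "flag_rate": 0.0, "removal_rate": 0.0,
                "median_response_minutes": 0.0}
    flagged = [e for e in log if e.flagged_at is not None]
    # Response time: minutes from flag to moderator resolution, for resolved flags only.
    response_minutes = [
        (e.resolved_at - e.flagged_at).total_seconds() / 60
        for e in flagged
        if e.resolved_at is not None
    ]
    return {
        "volume": total,
        "flag_rate": len(flagged) / total,
        "removal_rate": sum(e.removed for e in log) / total,
        "median_response_minutes": median(response_minutes) if response_minutes else 0.0,
    }


# Example: four comments, two flagged and resolved after 30 and 90 minutes.
start = datetime(2024, 1, 1, 12, 0)
log = [
    LogEntry(1),
    LogEntry(2, removed=True, flagged_at=start, resolved_at=datetime(2024, 1, 1, 12, 30)),
    LogEntry(3, flagged_at=start, resolved_at=datetime(2024, 1, 1, 13, 30)),
    LogEntry(4),
]
print(moderation_metrics(log))
```

Running this on each week’s log and comparing the results is one straightforward way to spot the trends described above, such as a rising flag rate on a particular topic.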

Keep Your Community Safe with Effective Content Moderation

As digital interactions expand and evolve, content moderation strategies must adapt to stay effective, relevant, and respectful of today’s global online communities.

Effective moderation of user-generated content, such as comments, requires a balance of clear community guidelines, fast technological support, AI automation, and human judgment. Remember, successful moderation involves more than removing inappropriate content; it helps nurture a space where your community can flourish.

Looking to boost engagement while keeping your site safe? Try Arena Comment System and unlock powerful features that drive interaction and retention.